Architecture

Pipeline of our approach.

The pulp-and-paper manufacturing sector necessitates stringent quality control measures to guarantee a contaminant-free end product, suitable for various applications. A vital quality metric in this industry is the fungal spore concentration, which can severely affect the paper's usability if present in excessive amounts. Current techniques for assessing fungal spore concentration involve labor-intensive lab tests, with results taking up to 1-2 days. This delay hinders real-time control strategies, emphasizing the need for precise real-time fungal spore concentration predictions to maintain exceptional quality standards. This study showcases the outcome of a data challenge focused on devising a method to predict fungal spore concentration in the pulp-and-paper production process utilizing time-series data. We propose a machine learning framework that synthesizes domain knowledge, based on two crucial assumptions. Our optimal model employs Ridge Regression with an alpha value of 2, achieving a Mean Squared Error (MSE) of 2.90 on our training and validation data. The following section discusses the data and insights obtained from the original dataset.

Competition Condition: To predict the target value for a timestamp t1, you cannot use data with timestamp > t1.

Pipeline of our approach.

Data preprocessing:

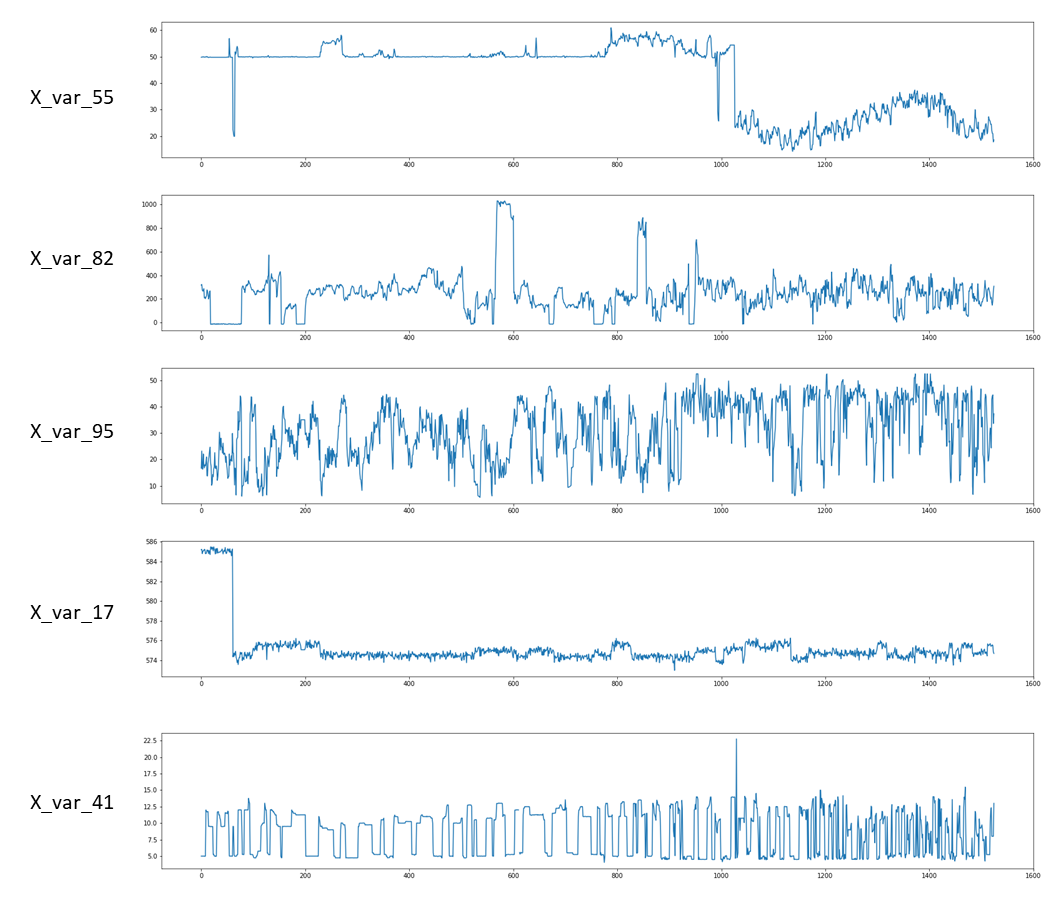

In this study, we employed timestamp as the index to regulate temporal dependence in our model. To address missing data, five columns with 965 instances were excluded, while the mode was utilized to impute values in a single column. Preprocessing entailed standardization for numerical attributes and onehot encoding for categorical variables

Train-validation Splitting:

To evaluate model performance, we devised a distinctive data partitioning strategy. Initially, the first 40% of the dataset was allocated for training, ensuring sufficient data availability. Subsequently, the remaining 60% was divided into validation and training subsets, which were merged with the previous partition. This approach emulates the original problem, providing ~750 initial data points for model training.

Feature Selection:

To mitigate noise, feature selection techniques were employed, including Principal Component Analysis, Random Forest Regressor, and SelectKBest, enabling identification of the most relevant features and enhancing model validity

Model Selection and Training:

We conducted experiments with prevalent machine learning algorithms, including Linear, Ridge, and Lasso regression, Random Forest, Gradient Boosting, and Adaptive Boosting. A constraint required training only on data up to t for predicting t+1. To accommodate this, model parameters were stored in a binary serialization file, enabling efficient parameter updates without retraining on the entire dataset.

Prediction Synthesizing the Domain Knowledge:

From Analyzing the target variable of the training data, we came to two assumptions which we are referring as domain knowledge. The two assumptions are: I. All the "y_var" are the multipliers of the 5. Our assumption is the measurement scale has the precision of 5. II. As the concentration cannot be negative so we have chosen any negative prediction as zero.

Upon experimenting with training data, partitioned into training and validation sets that emulate the original testing dataset, we observed the following results. Using all available variables as input, alongside selected features via algorithms such as Random Forest, PCA, and SelectKBest, Random Forest demonstrated superior performance in terms of MSE and MAE. Notably, the cleaned and preprocessed original dataset outperformed feature-selected versions. A comparative analysis between the full data and Random Forest models, with respect to MSE and R-square, is provided in this figures. Among the Machine Learning algorithms, Ridge regression was selected due to its superior performance compared to other algorithms, including Linear Regression, Adaptive Boosting, Gradient Boosting, and Extra Trees Regressor.